Evading Sandboxes and Antivirus Through Payload Splitting

Malware has been using the Temporary Internet Files folder structure as a launching point for the past 20 years, but from an offensive standpoint I haven’t seen much else that leverages the quirks and functionality it can provide. A few weeks back, during an engagement, I noticed the wide variety of filetypes in the folder structure that appeared to have been downloaded directly from the internet and were in no way obfuscated, compressed, or restricted. Because of a few other projects I was working on at the time, I started thinking about the potential implications of this, as well as how far it could be taken. The result of this research was a technique for splitting payloads to evade antivirus and sandboxes, which also provides a potential new method for payload encryption / environmental keying.

During penetration tests, I increasingly find myself hosting payloads on a third-party site and sending a link to that site in the phish, rather than simply including the payload as an email attachment. This is due in large part to the numerous steps organizations have taken in recent years to restrict and inspect files entering their network this way. However, because the end user is now visiting a site I control as part of the phish, this provides a new opportunity to transparently download code onto their system, into the Temporary Internet Files folder structure, via an I-frame, in addition to delivering a traditional payload. We can then write that payload so it does not execute anything malicious itself, but instead searches the local file system and compiles / executes the code located in the user’s Temporary Internet Files.

This technique can evade antivirus because neither file is considered malicious on its own. It also evades sandboxes because the appliance will not have visited the same page the user did, and thus will not have a copy of the code pulled down via the I-frame. Below is an in-depth walkthrough of the setup and operation of this vector.

A Background on Temporary Internet Files and Caching

‘Temporary Internet Files’ (INetCache in Win10) is a user-specific folder located in %userprofile%\appdata\local\microsoft\windows. It acts as the repository for files downloaded while browsing the web with Internet Explorer (Edge uses a similar method for temporary file storage, but has a separate directory structure). Although these files appear to be in a single folder when browsed through the GUI and browser, in reality they exist in a variety of randomly named, system-generated folders that lie several directories deeper in the folder structure. Files stored in this structure are cached to reduce network demand and allow sites to load more quickly. Chrome and Firefox store their temporary internet files in a compressed format, making them less accessible than those downloaded through IE.

The server typically controls caching and, as we will see later, it can set varying lifetimes for resources before the client requests them again. This makes sense, as some resources (such as a corporate logo or a video embedded on a website) rarely change, and thus can be downloaded periodically rather than every time the site is loaded. However, this means that the client is downloading code to their local disk from a remote location without any prompts or warnings to the end user.

This by itself does not represent a security risk, and clicking through to accept a huge number of download requests on every site you visit would get old extremely quickly. Rather, what initially caught my attention was the way IE and Edge cache flat files in a (relatively) easily findable location. I found I could coerce a download of a file from the server to the client, and subsequently access a fully readable copy sitting in the previously mentioned folder structure on the client’s system.

Setting up Apache

To get files downloaded onto client systems connecting to us, we first need to set up our server to add the requisite headers to our traffic. Luckily, Apache has pre-built modules that help us do exactly what we need.

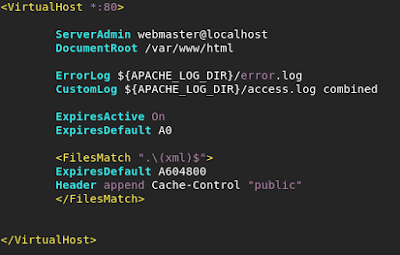

Using a standard Ubuntu / Debian box, we enable mod_headers and mod_expires (through a2enmod headers and a2enmod expires, respectively). From there, we modify our virtual host file (in /etc/apache2/sites-available/) to include the necessary rules (in this example we’ll be using an .xml file to host code to be compiled on the client system):

Really, all this does is say that any .xml file that is served should have a cache-control header set on it, with an expiration of 604800 seconds (one week) from when it is downloaded. This means that if the browser attempts to access the site again, it will compare the age of the cached file against that lifetime. If the file is less than one week old, the browser will not request an updated version from the server. Performing a curl of a resource with cache control set up for it, and comparing it against one without (such as a .html file), shows that our configured rules are working as intended:
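To make the freshness rule concrete, here is a minimal Python sketch of the check a browser performs on a cached copy. It assumes the server sends a `Cache-Control: max-age=604800` header like the one described above. The helper names are hypothetical, and real browsers apply further rules from the HTTP caching specification (`no-store`, the `Age` header, heuristic freshness, revalidation) that this sketch deliberately ignores.

```python
from typing import Mapping, Optional

ONE_WEEK = 7 * 24 * 60 * 60  # 604800 seconds, the lifetime used above


def parse_max_age(headers: Mapping[str, str]) -> Optional[int]:
    """Return the max-age directive (in seconds) from Cache-Control, or None."""
    # HTTP header names are case-insensitive, so normalize before lookup.
    normalized = {name.lower(): value for name, value in headers.items()}
    cache_control = normalized.get("cache-control")
    if cache_control is None:
        return None
    # Cache-Control is a comma-separated list of directives, e.g. "max-age=604800, public".
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.lower() == "max-age" and value.strip().isdigit():
            return int(value.strip())
    return None


def is_fresh(headers: Mapping[str, str], age_seconds: int) -> bool:
    """True if a cached copy this old can be reused without asking the server again."""
    max_age = parse_max_age(headers)
    # Simplification (assumption): with no max-age, treat the copy as stale.
    return max_age is not None and age_seconds < max_age


if __name__ == "__main__":
    xml_headers = {"Cache-Control": "max-age=604800, public"}
    html_headers = {"Content-Type": "text/html"}
    print(parse_max_age(xml_headers) == ONE_WEEK)   # True
    print(is_fresh(xml_headers, 3 * 24 * 60 * 60))  # True: three days old
    print(is_fresh(xml_headers, 8 * 24 * 60 * 60))  # False: eight days old
    print(is_fresh(html_headers, 60))               # False: no caching rule
```

The .html comparison in the last line mirrors the curl check: without a `max-age` directive, the sketch never treats the cached copy as fresh.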

Building Hosted Files and the Landing Page

Before we can configure our landing page, we need to set up a hosted file that will be dropped onto the client’s system and decide what we want it to do. IE is typically pretty open about the types of files it will automatically download, and I’ve had success with a variety of file extensions (.vbs, .ps1, .xml, etc.). In our example, however, we’ll be using an MSBuild-compatible .xml stager that contains C# source code. When built, this code will in turn grab a second-stage assembly from a remote webserver and execute it in memory. An example of the general outline of this stager code can be found here: https://gist.github.com/G0ldenGunSec/62b8166c23573fc64c6eeb29e8c5b818

We’ll run this code through VirusTotal (VT) and make sure we’re not going to get picked up immediately:

Next, we need to create a payload that the user will download to begin the execution chain. For this example, we’ll use a basic .hta file containing VBScript code that searches for our file within the known Temporary Internet Files directory structure. If the file is found, the script uses MSBuild to compile and run our source code. In practice, this could be any of the wide variety of payloads already used in traditional phishing attacks, but with the added benefit of splitting code to further evade detection. One important note: because we search for the .xml file by the name it is written to disk with, using a unique or sufficiently long random string as the filename is recommended.
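As a rough sketch of that naming advice, Python’s standard `secrets` module can generate a long random filename. The `random_xml_name` helper and its 16-byte default are illustrative assumptions, not part of the original setup.

```python
import secrets


def random_xml_name(num_bytes: int = 16) -> str:
    """Return a random filename like '3f9c...e1.xml' (two hex characters per byte)."""
    # token_hex draws from the OS's cryptographically secure RNG. At 16 bytes
    # (128 bits), two generated names are vanishingly unlikely to collide, and
    # an unrelated cached file is unlikely to share the name.
    return secrets.token_hex(num_bytes) + ".xml"


if __name__ == "__main__":
    print(random_xml_name())
```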

Now that we have our hosted files set up, we can move on to building the server-side infrastructure: a landing page that will host them. In our example, we have an extremely simple page that hosts a file download and also contains a hidden I-frame that loads our .xml payload file. If configured correctly, the I-frame should cause the C# source code hosted in the .xml file to be downloaded to the client system.


In a real-world scenario, I would likely include the I-frame on the initial landing page (e.g., a page requiring a user login to access a secure email) and host the actual file download on a separate page. This can also be accomplished with an HTML redirect on the landing page. For a demo, however, this is all we need, and our server should now be ready to deliver both our source code file and our selected payload.

Putting It Together

Now that we understand the process behind the attack, we’ll run through a demo of the full execution chain on a fully patched Win 10 box. This shows how we can gain execution of an arbitrary C# assembly hosted on an external website from our initial HTA download. Let’s first browse to the web page we set up on our server (pretending that we received a phishing link directing us to this site):

Cool, nothing too crazy is going on in the web console. I see the link to download the .HTA file we’ll use for first-stage execution, but that’s about it. Let’s see whether our I-frame functioned as intended and downloaded the linked .xml file to disk:

It looks like it was successfully downloaded. Now let’s quickly validate that our code is actually in there:

So we now have our C# code sitting in an .xml file in a fairly easy-to-find spot on disk. Let’s execute our .hta payload from the website and see what happens:

Awesome, we got code execution from our second-stage assembly that was hosted on a remote server.

Concerns and Additional Uses

In the process of researching this, I stumbled upon several items that I wanted to mention in addition to the walkthrough above. First, several of my colleagues raised the extremely valid concern of browser compatibility. After all, there is no guarantee that users will visit your website with IE. They may instead be using Chrome, Firefox, Edge, etc. The best answer here lies with Apache’s mod_rewrite functionality. Inspecting the connecting user agent allows your server to determine which payload to serve. Users connecting with non-compatible browsers can then be redirected either to a splash page saying the site is only viewable in IE, or to a different payload that does not depend on this technique. It is also worth mentioning that this technique is fully compatible with the Edge browser (if anyone happened to be using it). However, because Edge uses a separate directory structure from IE, either unique payloads will need to be created or a single payload that searches both trees will need to be built.
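The routing decision itself is plain string matching on the User-Agent header, the same value a mod_rewrite `RewriteCond %{HTTP_USER_AGENT}` rule tests. Below is a hedged Python sketch of that logic. It assumes IE identifies itself with the `Trident/` or `MSIE ` token and that legacy (EdgeHTML) Edge appends `Edge/`. The page labels are hypothetical placeholders. User-Agent strings can also be spoofed and change between browser versions.

```python
# Hypothetical page labels mirroring the three options described above.
PAGES = {
    "ie": "ie_landing_page",         # IE: the cache-based variant
    "edge": "edge_landing_page",     # legacy Edge: variant aware of Edge's cache tree
    "other": "ie_only_splash_page",  # anything else: splash page (or a different payload)
}


def classify_browser(user_agent: str) -> str:
    """Classify a User-Agent string as 'ie', 'edge', or 'other'."""
    # IE 11 sends 'Trident/7.0'; IE 10 and earlier send 'MSIE <version>'.
    if "Trident/" in user_agent or "MSIE " in user_agent:
        return "ie"
    # Legacy (EdgeHTML) Edge appends 'Edge/<version>'. Chromium-based Edge
    # sends 'Edg/<version>' instead, so it falls through to 'other' here.
    if "Edge/" in user_agent:
        return "edge"
    return "other"


def choose_page(user_agent: str) -> str:
    """Return the hypothetical page label to serve for this User-Agent."""
    return PAGES[classify_browser(user_agent)]


if __name__ == "__main__":
    ie11 = "Mozilla/5.0 (Windows NT 10.0; WOW64; Trident/7.0; rv:11.0) like Gecko"
    print(choose_page(ie11))  # ie_landing_page
```

The IE check runs first. Edge also includes other browsers’ tokens (such as `Chrome/`) in its User-Agent, so the sketch keys each branch on a token only that browser sends.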

Secondly, a topic that was not touched on but may also be of interest is the applicability of this technique to payload encryption and environmental keying. Rather than an I-frame coercing a file download containing code to execute, the downloaded file could simply contain a decryption key. This file would only be present on the system that browsed to the site containing the I-frame. The payload therefore could not be decrypted elsewhere, even by another system on the same domain or another system the same user is logged into. The encrypted payload would perform a function similar to the VBScript payload shown above: it would simply search for a file with a specific, pre-determined name and attempt to extract the decryption key. If successful, the primary payload would decrypt and execute.

Finally, although all demos were done in an internal lab range, the process has been tested repeatedly over the internet from several cloud-hosted boxes, with every stage succeeding.

Conclusion

This was a very simple example of a payload that could be built from two separate files and combined into an effective attack vector. I’m sure there are way cooler ways to utilize this and make more effective payloads. Ideas range from something as simple as scripting cleanup of the initial stager files from the disk upon successful execution, to payload encryption and staging of complex projects through multiple files dropped to disk.
